2021 was, in many respects, a tough year, not least because of the pandemic, to state the obvious. Despite this, it was also a productive year for research: I got to advance my goals of democratising tensors for better AI and to develop new methodologies to improve our models and their training. So here are a few of this year's achievements: a retrospective on 2021 and a look ahead to 2022, which may well be the year of the tensor!

TensorLy: Democratising Tensor Methods for All

Tensor methods generalise matrix algebraic methods to more than two dimensions, and tensor algebra is a strict superset of matrix algebra (a matrix is simply an order-2 tensor). They're a powerful tool ("the next big thing", to quote none other than Charles Van Loan!) but typically come with a steeper learning curve.

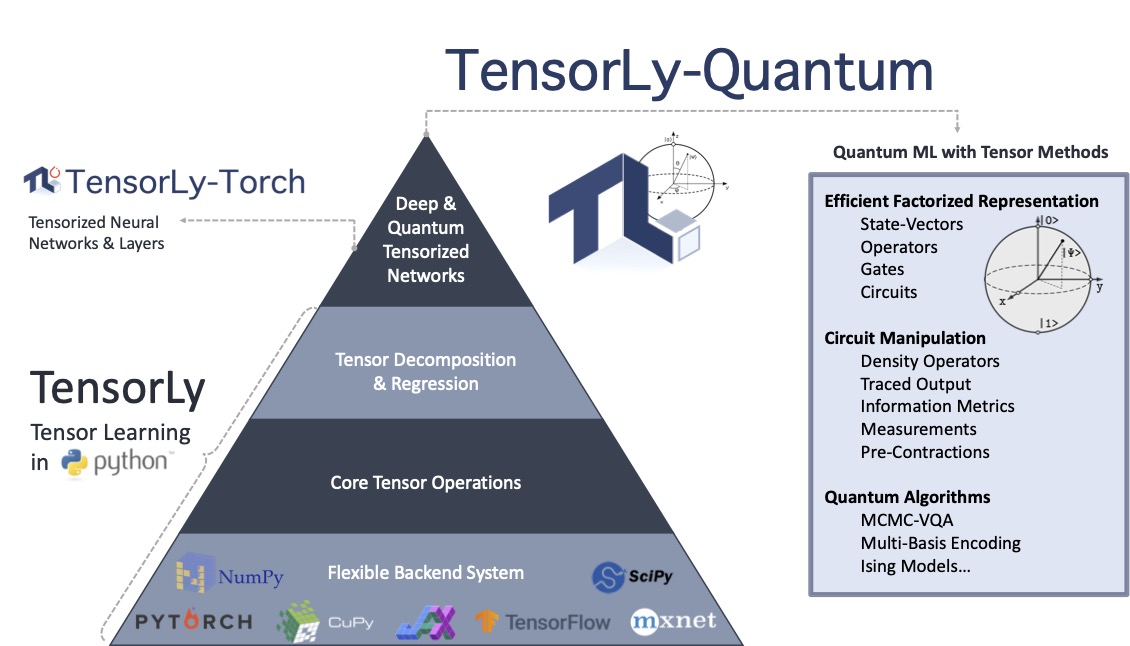
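To make the matrix-to-tensor generalisation concrete, here is a minimal sketch of a rank-R CP (CANDECOMP/PARAFAC) tensor. It uses plain NumPy rather than TensorLy's API, and the helper `cp_to_full` and all sizes are hypothetical. Each entry is a weighted sum of products of factor-matrix entries, and in the order-2 case this reduces to the familiar low-rank matrix product.

```python
import numpy as np

def cp_to_full(weights, factors):
    """Rebuild a full order-3 tensor from CP weights and factor matrices.

    T[i, j, k] = sum_r weights[r] * A[i, r] * B[j, r] * C[k, r]
    """
    A, B, C = factors
    return np.einsum("r,ir,jr,kr->ijk", weights, A, B, C)

rng = np.random.default_rng(0)
rank = 3
A = rng.standard_normal((4, rank))
B = rng.standard_normal((5, rank))
C = rng.standard_normal((6, rank))
weights = np.ones(rank)

T = cp_to_full(weights, (A, B, C))
print(T.shape)  # (4, 5, 6)

# Order-2 special case: a rank-3 matrix is the familiar product A @ B.T
M = np.einsum("r,ir,jr->ij", weights, A, B)
assert np.allclose(M, A @ B.T)

# Storage cost: factors only vs. the dense tensor
print(A.size + B.size + C.size, T.size)  # 45 120
```

The factors hold 45 numbers against 120 for the dense tensor. That gap widens quickly with the order and size of the tensor, which is what makes factorized representations attractive.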

With TensorLy, we aim to make them easily accessible through a simple, high-level Python API. It comes with a flexible backend system that lets anyone run the computation in their favourite framework, from the universal NumPy to PyTorch, JAX, TensorFlow and MXNet for GPU acceleration.

With the amazing TensorLy dev team, we released several new versions of the library and added many new features!

TensorLy-Torch: Tensorizing Deep Learning

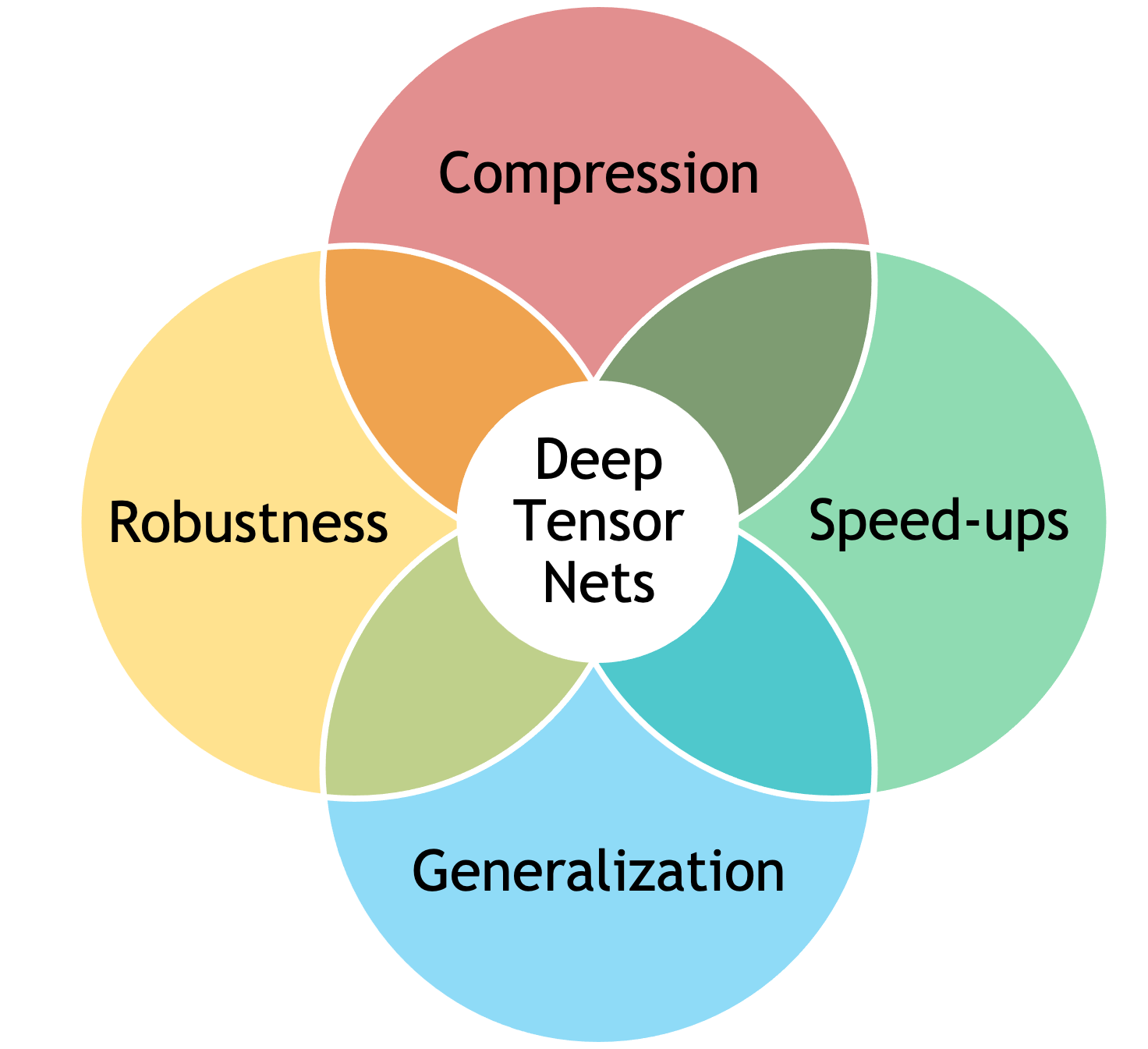

One particularly interesting application of tensor methods is improving deep learning models in terms of performance, robustness, compression and speed.

However, doing so requires a lot of expertise, particularly when you want to compose different techniques, e.g. decomposing existing weights to express them in factorized form, replacing the original operations with more efficient ones that act directly on the factors of the decomposition, and adding things like tensor dropout. Training from scratch is no walk in the park either if you're not careful: correctly initializing tensor factorizations can be tricky.
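As an illustration of why initialization needs care, here is a sketch under the assumption of a CP-factorized weight whose factor entries are i.i.d. zero-mean Gaussians. The helper `cp_factor_std` is hypothetical, and this is not necessarily the scheme TensorLy-Torch implements. Each reconstructed entry is a sum of R products of d independent factor entries, so its variance is R·s^(2d); solving for s gives the factor std needed to hit a target std for the full weight.

```python
import numpy as np

def cp_factor_std(target_std, rank, order):
    """Std of i.i.d. zero-mean factor entries so the CP reconstruction has `target_std`.

    Each reconstructed entry is a sum of `rank` products of `order` independent
    factor entries, so Var = rank * s**(2 * order), i.e.
    s = (target_std**2 / rank) ** (1 / (2 * order)).
    """
    return (target_std ** 2 / rank) ** (1.0 / (2 * order))

rng = np.random.default_rng(0)
shape, rank, target_std = (100, 100, 100), 8, 0.02
s = cp_factor_std(target_std, rank, order=len(shape))
factors = [rng.normal(0.0, s, size=(dim, rank)) for dim in shape]

# Reconstruct the full order-3 tensor and compare its empirical std to the target
full = np.einsum("ir,jr,kr->ijk", *factors)
print(f"factor std={s:.4f}, reconstructed std={full.std():.4f}, target={target_std}")

# For comparison: naive unit-variance factors give entries with std close to sqrt(rank)
naive = np.einsum("ir,jr,kr->ijk", *[rng.standard_normal((d, rank)) for d in shape])
```

Naively drawing unit-variance factors instead gives weights with a std of about sqrt(R), here roughly 140 times larger than the 0.02 target.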

To lower the barrier to entry, I wrote TensorLy-Torch, released this year, which provides all of the above, and more, out of the box. You can transparently manipulate tensors in factorized form, define layers using them and add things like tensor dropout or L1 rank regularization with just a few lines of PyTorch code!
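L1 rank regularization can be pictured as follows, assuming a CP-factorized layer whose rank-one components carry an explicit weight vector. The helpers and values below are hypothetical, and TensorLy-Torch's own regularizer may be implemented differently. The penalty pushes weights towards zero; a proximal (soft-thresholding) step, one standard way of optimizing an L1 term, makes small weights exactly zero, so the corresponding components can be pruned and the effective rank shrinks.

```python
import numpy as np

def l1_rank_penalty(weights, lam):
    """L1 penalty on the CP weights, to be added to the training loss."""
    return lam * np.abs(weights).sum()

def prox_l1(weights, step):
    """Proximal operator of step * ||w||_1 (soft-thresholding).

    Weights whose magnitude falls below `step` become exactly zero, and the
    matching rank-one components can then be pruned.
    """
    return np.sign(weights) * np.maximum(np.abs(weights) - step, 0.0)

weights = np.array([2.0, 0.5, -0.05, 0.01])  # illustrative CP weights of a layer
penalty = l1_rank_penalty(weights, lam=0.1)
shrunk = prox_l1(weights, step=0.1)
effective_rank = int(np.count_nonzero(shrunk))
print(penalty, shrunk, effective_rank)
```

In a proximal gradient scheme, `step` would be the learning rate times the penalty strength `lam`.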

Going beyond supervised learning, tensorization can also help in reinforcement learning, for instance to jointly model, in factorized form, the action space of multiple agents, as in our ICML paper.
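One way to picture a factorized joint action space: with n agents and k actions each, a dense table of joint action-values has k^n entries, whereas a CP factorization with one (k x R) factor per agent needs only n·k·R numbers. The sketch below assumes such a state-independent CP parametrization with random, hypothetical factors; the model in the paper (for instance, how the factors depend on the state) may differ.

```python
import numpy as np

rng = np.random.default_rng(0)
n_agents, n_actions, rank = 4, 5, 3

# Hypothetical factors: one (n_actions x rank) matrix per agent
factors = [rng.standard_normal((n_actions, rank)) for _ in range(n_agents)]

def q_value(joint_action):
    """Q(a_1, ..., a_n) = sum_r prod_i U_i[a_i, r], computed without the dense tensor."""
    prod = np.ones(rank)
    for U, a in zip(factors, joint_action):
        prod = prod * U[a]
    return prod.sum()

dense_params = n_actions ** n_agents       # 625 entries in a dense joint table
cp_params = n_agents * n_actions * rank    # 60 numbers in factorized form
print(q_value((1, 2, 3, 4)), dense_params, cp_params)
```

Evaluating one joint action costs n·R multiplications, independent of k^n.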

Making Neural Networks Robust

Tensor methods give us a rigorous way of studying machine learning methods and, in many cases, of improving upon existing models. We proposed one way to do so in the IEEE Journal of Selected Topics in Signal Processing: tensor dropout, which generalizes matrix dropout to tensors to improve performance.
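Tensor dropout can be sketched on a CP-factorized tensor as follows. The assumptions, labelled here and in the code, are that it drops whole rank-one components (rather than individual entries, as matrix dropout does) and that kept components are rescaled as in standard inverted dropout; the Tucker variant and the exact scaling used in the paper may differ. The helper name is hypothetical.

```python
import numpy as np

def cp_tensor_dropout(weights, p, rng, training=True):
    """Randomly drop whole rank-one components of a CP-factorized tensor.

    Assumption: each component is kept with probability 1 - p, and kept weights
    are scaled by 1 / (1 - p) (inverted dropout) so the expected reconstruction,
    which is linear in the weights, is unchanged. At evaluation time the weights
    are returned untouched.
    """
    if not training or p == 0.0:
        return weights
    keep = rng.random(weights.shape) >= p
    return weights * keep / (1.0 - p)

rng = np.random.default_rng(0)
weights = np.ones(8)  # CP weights, one per rank-one component
dropped = cp_tensor_dropout(weights, p=0.5, rng=rng)
print(dropped)  # each entry is either 0.0 or 2.0

# Averaged over many draws, the weights (and hence the reconstruction) match the originals
mean = np.mean([cp_tensor_dropout(weights, 0.5, rng) for _ in range(20000)], axis=0)
```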

Building on tensor dropout, we proposed Defensive Tensorization at BMVC, which greatly improves the robustness of neural networks, especially when combined with adversarial training. Beyond the tensor aspect, randomness is underutilized in ML: at NeurIPS, we also explored more robust training through adversarial composition of random augmentations.

Tensor Methods and Quantum Computing

Tensor methods are a natural tool for quantum computing, so it was an obvious application, and one that has long interested me. While working on this, we developed Multi-Basis Encoding, a new method that, combined with efficient factorized representations of the states and operators, enabled solving the Ising problem for an unprecedented number of qubits.

Tensor methods, novel algorithms, factorized representations and quantum circuits all come together in a new open-source package, TensorLy-Quantum, which offers a simple API with autograd support through PyTorch modules for quantum machine learning.
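A small illustration of why factorized states help, covering only the simplest, rank-one case (a product state) and using plain NumPy rather than TensorLy-Quantum's API; all helper names are hypothetical. An n-qubit product state needs 2n numbers instead of 2^n amplitudes, and two-qubit observables such as <Z_i Z_j> factorize into single-qubit expectations, which the code checks against the dense state vector.

```python
import numpy as np
from functools import reduce

def product_state(angles):
    """Factorized n-qubit product state: one 2-vector per qubit.

    Qubit q is cos(t/2)|0> + sin(t/2)|1>, so storage grows as 2n instead of 2**n.
    """
    return [np.array([np.cos(t / 2), np.sin(t / 2)]) for t in angles]

def z_expectation(qubit):
    """<Z> of a single-qubit state [a, b]: |a|^2 - |b|^2 (equals cos(t) here)."""
    return abs(qubit[0]) ** 2 - abs(qubit[1]) ** 2

def zz_factorized(factors, i, j):
    """<Z_i Z_j> on a product state factorizes into <Z_i> * <Z_j>."""
    return z_expectation(factors[i]) * z_expectation(factors[j])

def zz_dense(state, i, j, n):
    """Same quantity from the full 2**n state vector (only feasible for small n)."""
    Z, I = np.diag([1.0, -1.0]), np.eye(2)
    op = reduce(np.kron, [Z if q in (i, j) else I for q in range(n)])
    return np.conj(state) @ op @ state

angles = [0.3, 1.2, 2.0, 0.7]
factors = product_state(angles)
dense = reduce(np.kron, factors)  # 2**4 = 16 amplitudes vs. 4 * 2 = 8 numbers
print(zz_factorized(factors, 0, 2), zz_dense(dense, 0, 2, len(angles)))
```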

Using TensorLy-Quantum and cuQuantum, we solved MaxCut for a record number of 3375 qubits on 896 GPUs.
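For reference, the objective behind the MaxCut result can be written with Ising spins z_i in {+1, -1}: the cut value is the sum over edges of (1 - z_i z_j)/2, so maximizing the cut is equivalent to minimizing the Ising energy, the sum over edges of z_i z_j. The brute-force sketch below (hypothetical helpers, standard library only) only pins down that objective. It is neither Multi-Basis Encoding nor the TensorLy-Quantum/cuQuantum pipeline, and its 2^n search is exactly what becomes hopeless at thousands of qubits.

```python
import itertools

def cut_value(edges, spins):
    """Number of edges crossing the partition encoded by spins in {+1, -1}."""
    # (1 - z_i * z_j) is 2 for a cut edge and 0 otherwise
    return sum((1 - spins[i] * spins[j]) // 2 for i, j in edges)

def brute_force_maxcut(n, edges):
    """Exhaustive search over all 2**n spin assignments."""
    best = max(itertools.product((1, -1), repeat=n), key=lambda z: cut_value(edges, z))
    return best, cut_value(edges, best)

# 5-cycle: an odd cycle, so at most 4 of its 5 edges can be cut
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
spins, value = brute_force_maxcut(5, edges)
print(value)  # 4
```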

Unsupervised Social Media Analysis

When given a very large corpus of data, how do you study the evolution of the topics discussed without requiring annotations? Latent Dirichlet Allocation (LDA) is one way, but it is prohibitively expensive to run at scale. By going to higher-order moments, however, we can do this efficiently with Tensor LDA. We've been working on this in collaboration with Caltech's awesome Professor Alvarez and a team of postdocs and PhD students; see Sara Kangaslahti's SURF project talk. Have a look at our monograph for more detail. This project has been really fun, and we'll have more coming soon!
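The moment-based idea behind Tensor LDA can be illustrated with the classic tensor power method. The sketch assumes the third-order moment has already been whitened, so that it is a symmetric tensor sum_k w_k mu_k ⊗ mu_k ⊗ mu_k with orthonormal components mu_k and positive weights. The components here are synthetic, the helper name is hypothetical, and this is not the implementation used in the project. Each component is found by repeatedly applying u ← T(I, u, u) and normalizing, then deflated before looking for the next.

```python
import numpy as np

def tensor_power_method(T, n_components, n_iter=100, seed=0):
    """Recover components of an orthogonally decomposable symmetric 3rd-order tensor.

    Assumes T = sum_k w_k mu_k (x) mu_k (x) mu_k with orthonormal mu_k and w_k > 0,
    i.e. moments that have already been whitened.
    """
    rng = np.random.default_rng(seed)
    T = T.copy()
    weights, vectors = [], []
    for _ in range(n_components):
        u = rng.standard_normal(T.shape[0])
        u /= np.linalg.norm(u)
        for _ in range(n_iter):
            u = np.einsum("ijk,j,k->i", T, u, u)  # T(I, u, u)
            u /= np.linalg.norm(u)
        w = np.einsum("ijk,i,j,k->", T, u, u, u)  # T(u, u, u)
        weights.append(w)
        vectors.append(u)
        T -= w * np.einsum("i,j,k->ijk", u, u, u)  # deflate the recovered component
    return np.array(weights), np.array(vectors)

rng = np.random.default_rng(1)
d, k = 6, 3
mu = np.linalg.qr(rng.standard_normal((d, k)))[0].T  # k orthonormal components of length d
w_true = np.array([3.0, 2.0, 1.0])
M3 = np.einsum("r,ri,rj,rk->ijk", w_true, mu, mu, mu)

w_hat, mu_hat = tensor_power_method(M3, k)
print(np.round(w_hat, 6))
```

Components are recovered in an order that depends on the random start, so compare them as a set rather than position by position.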

Similar underlying techniques can be used to control the generation of GANs in a fully unsupervised manner. We demonstrated this by proposing a self-training framework for unsupervised controllable generation from a set of latent codes sampled from a latent variable model. We used a normalized ICA whose parameters were learned through a tensor factorization of higher-order moments.

A Review

For a more general overview, in parallel to the code, we wrote extensive documentation and tutorials, as well as a blog post, gave a few talks, including at GTC, and published a review of tensor methods in computer vision and deep learning in the Proceedings of the IEEE.

Looking ahead

So what does this mean for the year to come? Plenty of exciting research! We've barely started to scratch the surface of what is possible by efficiently leveraging tensor methods, whether in machine learning or quantum computing.

Stay tuned for more to come. Get involved by forking the TensorLy repository and reaching out on Slack! And if you have comments, let me know: I'd love to hear from you and discuss!

Happy New Year to all and welcome to the Year of The Tensor!